Why AI Belongs in AR Mobile Apps

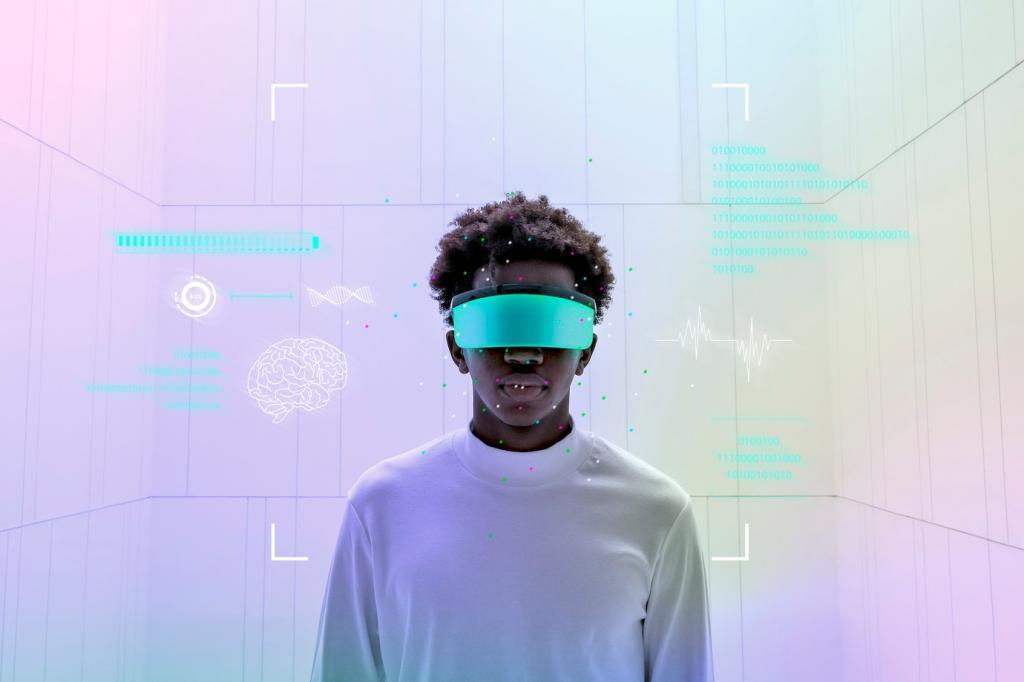

With on-device semantic segmentation and object recognition, AR can respect depth, surfaces, and object boundaries, so virtual content sits naturally in your environment instead of floating on top of it. A museum app we tested identified sculptures instantly and surfaced the otherwise invisible story behind each piece right beside it. What would your app explain in context?
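
To make "respecting depth and boundaries" concrete, here is a minimal sketch of per-pixel occlusion. It is not taken from any particular AR SDK. The function name, the plain-list frame format, and the `foreground_mask` input (pixels a segmentation model flagged as real objects that should always cover virtual content) are all assumptions for illustration.

```python
def composite_with_occlusion(camera, virtual, virtual_depth, scene_depth, foreground_mask):
    """Draw virtual pixels only where they would actually be visible.

    All arguments are equally sized 2D lists (rows of pixels).
    A value of None in `virtual` means there is no virtual content at that pixel.
    Depths are distances from the camera, so a smaller value is closer.
    """
    output = []
    for y, camera_row in enumerate(camera):
        output_row = []
        for x, camera_pixel in enumerate(camera_row):
            virtual_pixel = virtual[y][x]
            hidden = (
                virtual_pixel is None
                # Assumed simplification: segmented foreground always wins.
                or foreground_mask[y][x]
                # A real surface is at least as close as the virtual content.
                or virtual_depth[y][x] >= scene_depth[y][x]
            )
            output_row.append(camera_pixel if hidden else virtual_pixel)
        output.append(output_row)
    return output


# One-row example: "c" is a camera pixel, "v" is a virtual pixel.
frame = composite_with_occlusion(
    camera=[["c", "c", "c"]],
    virtual=[["v", "v", None]],
    virtual_depth=[[1.0, 3.0, 1.0]],
    scene_depth=[[2.0, 2.0, 2.0]],
    foreground_mask=[[False, False, False]],
)
print(frame)
```

In a real app this comparison would normally run on the GPU against the depth and segmentation buffers your AR framework provides. The loop above only shows the decision made at each pixel.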

AI tailors AR overlays to each user's goals, location, and habits without feeling creepy. Lightweight on-device learning nudges recommendations while respecting privacy, because usage data does not have to leave the phone. Imagine a home-improvement AR guide that adapts its tooltips to your skill level after observing a few sessions. Would your users prefer proactive or reactive hints?
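
As a rough illustration of the home-improvement example, the sketch below keeps a running skill estimate from a few sessions and maps it to a tooltip tier. It is one simple way to do this, not a prescribed method. The class name, the smoothing weight, the thresholds, and the two per-session counts are all hypothetical, and nothing in it needs data to leave the device.

```python
from dataclasses import dataclass


@dataclass
class SkillEstimator:
    """Tracks a running skill estimate in [0, 1] using only local session counts."""

    alpha: float = 0.4  # weight given to the newest session (assumed value)
    skill: float = 0.0  # start by assuming a beginner

    def observe_session(self, steps_completed: int, hints_requested: int) -> None:
        """Update the estimate from one session's counts."""
        if steps_completed <= 0:
            return  # nothing to learn from an empty session
        # Share of steps finished without asking for help, clamped at 0.
        unaided = max(0.0, 1.0 - hints_requested / steps_completed)
        # Exponential moving average: recent sessions count more than old ones.
        self.skill = (1 - self.alpha) * self.skill + self.alpha * unaided

    def tooltip_level(self) -> str:
        """Map the estimate to a tooltip tier (thresholds are assumptions)."""
        if self.skill < 0.35:
            return "detailed"
        if self.skill < 0.7:
            return "brief"
        return "minimal"


# Four sessions in which the user asks for fewer hints each time.
estimator = SkillEstimator()
for steps, hints in [(10, 8), (10, 3), (10, 1), (10, 0)]:
    estimator.observe_session(steps, hints)
print(estimator.tooltip_level())
```

An estimate like this works with either hint style: a proactive guide could show tooltips unprompted at the "detailed" tier, while a reactive one waits for a tap and only changes how much it says.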